Thai PBS Verify found the source of the fake news on Facebook.

On January 14, 2026, it was reported that a construction crane from the Thai‑Chinese high‑speed rail project collapsed onto Special Express Train No. 21 on the Bangkok–Ubon Ratchathani route in Sikhio District, Nakhon Ratchasima, causing injuries and fatalities. At the same time, social media users widely shared an image purportedly showing the accident, with bodies covered with cloth lying on the railway track. The post was accompanied by the caption: “May the 22 souls rest in peace. May they achieve a better realm.”

The image gained more than 120,000 likes and was shared over 16,000 times, yet it circulated without a clear source, date, or timestamp. None of the official agencies or media outlets reporting from the scene released this photo. This raised strong doubts about its authenticity and about whether it had been created or manipulated with artificial intelligence (AI).

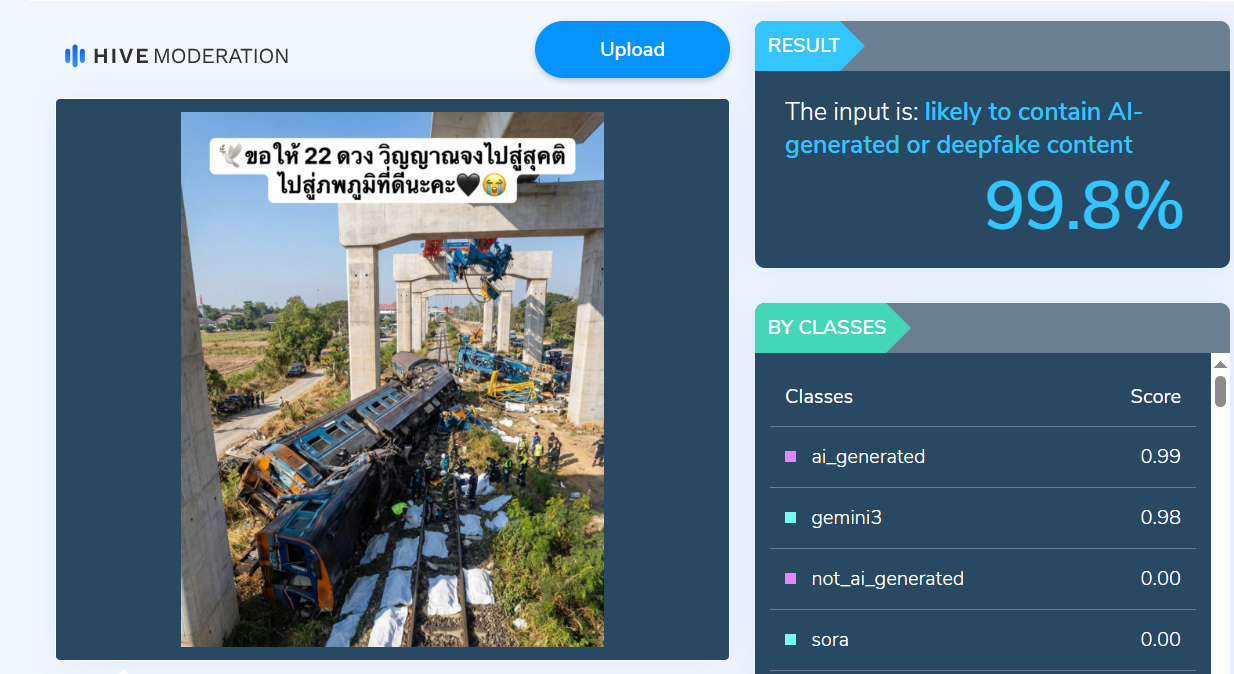

Post claiming to be an image of the crane collapse tragedy

Was the image AI‑generated?

When the viral photo was examined using Google Lens, the “About this image” section indicated that the picture had been created with Google’s artificial intelligence (AI).


In addition, when the photo was analyzed with the AI‑image detection tool on the Hive Moderation website, the results also indicated that the picture was likely generated by AI.

Analysis with Hive Moderation found a 99.8% likelihood that the photo was AI‑generated.

Comparing the real train with the AI‑generated image

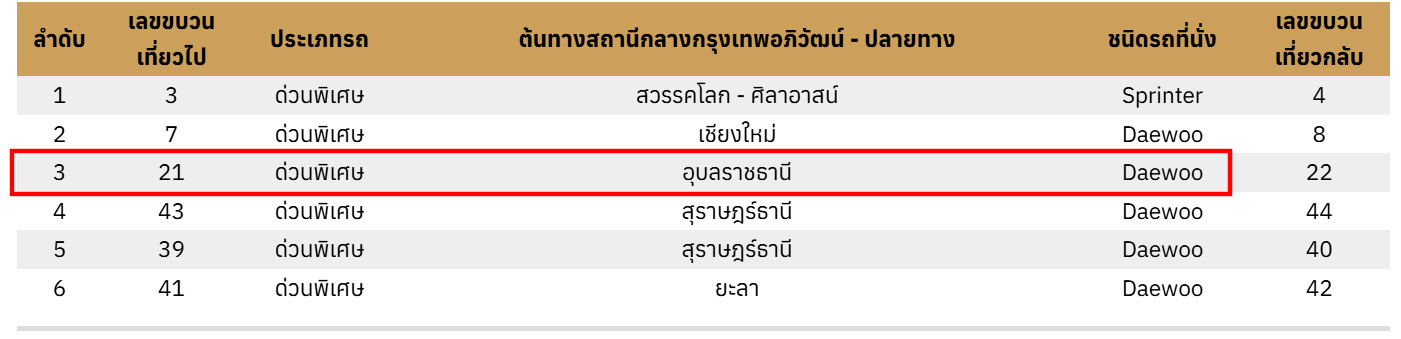

A review of official reports confirmed that the train involved in the accident was Special Express No. 21. According to information on the State Railway of Thailand’s website, the passenger train operating on this route was a Daewoo‑type train.

The train in the incident, Special Express No. 21 Bangkok–Ubon Ratchathani, was identified by the State Railway of Thailand as a Daewoo‑type passenger train.

The Daewoo passenger train involved in the accident (top) has doors at the front and rear of each carriage, whereas the ATR diesel railcar (bottom) has mid‑carriage doors, matching the train shown in the viral social media photo.

These structural differences indicate that the circulated photo did not come from the real incident but was generated by AI.

The AI‑generated image (top) shows a train with doors in the middle of the carriage, while the real accident photo (bottom) shows a Daewoo train with doors at the ends of the carriages.

Another discrepancy concerns the placement of the victims’ bodies. In the real incident, the bodies were not left on the railway tracks but were moved away from the crash site.

The AI‑generated image shows bodies lying in rows along the railway tracks.

Image showing the placement of the victims’ bodies in the real incident, photographed by the news team in Sikhio District, Nakhon Ratchasima.

State Railway of Thailand confirms that the image is fake

Methapat Sunthorawaraphak, Director of Public Relations at the State Railway of Thailand (SRT), confirmed that the train photo circulating on social media is not from the real incident.

The SRT also told Thai PBS Verify that the train involved in the accident was a Daewoo passenger train, purchased from South Korea.

By contrast, the image identified as AI‑generated shows structural features resembling an ATR diesel railcar, a model the SRT purchased from Japan that was not involved in the accident.

What is the truth?

The incident in which a construction crane from the Thai–Chinese high‑speed rail project collapsed onto Special Express Train No. 21 in Sikhio District, Nakhon Ratchasima, on January 14, 2026, was real and resulted in injuries and fatalities.

However, the aerial photo of a wrecked train with covered bodies lying on the tracks, widely shared on social media, did not come from the actual event. AI‑image detection tools indicated that the photo was AI‑generated. Moreover, the train’s features and the placement of the bodies in the image did not match official data or real photos from the incident. The State Railway of Thailand has also confirmed that the circulated photo is fake.